I’ve spent a lot of time working with Google Compute Engine over the past year and want to share some of what I’ve learned. For those of you not familiar with it, Google Compute Engine lets developers create and run virtual machines on Google infrastructure to take advantage of its scale, performance, and value. At Cardinal Path, we use Compute Engine to host individual databases as well as clusters of databases, and we have active ongoing builds using Ubuntu, Debian, Windows, and other standard images. While we operate both Amazon Web Services and Google Compute Engine (GCE) stacks, we have enjoyed using GCE for three reasons: its built-in load balancing service, which distributes heavy workloads across many virtual machines; its ability to automatically scale virtual machines up or down as traffic rises or falls (which means our sites and analytics never drop on Christmas day); and the ability to access our virtual machine instances through the Google Developers Console, a RESTful API, or a simple command-line tool.

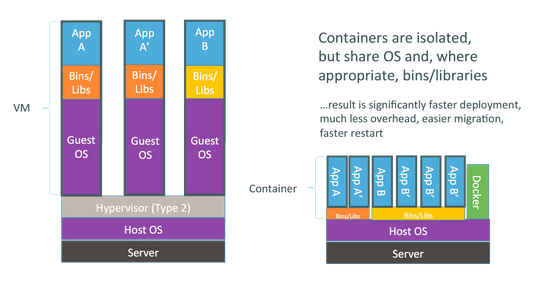

While we will continue to use and innovate with Google Compute Engine, the launch of the related Google Container Engine on August 26th, 2015, definitely caught our eye. In the past two decades, we have seen tremendous evolution in the server paradigm, with web-based architectures maturing into service orientations before finally evolving into true cloud-based architectures. Today, containers represent the next shift. Containers and virtual machines (VMs) share many similarities, but they are architecturally different. In particular, containers run as lightweight, isolated processes that share the kernel of a host operating system (OS), whereas VMs depend on a hypervisor to virtualize the underlying hardware, and each VM runs its own full guest OS.

Docker started the push in 2014, and over the last year many large platform companies, including AWS, Google, IBM, Microsoft, Red Hat, and VMware, have jumped on board the container revolution. Google, backed by proven experience managing billions of containers internally, moved quickly to open up its internal tools to developers. This led to what we now see as the full general release of Google Container Engine.

Google Container Engine is important from a business and management perspective. With Google Container Engine, a developer can create a managed cluster that’s ready for container deployment in only a few seconds. This allows teams to significantly reduce the time spent on application set-up, while also reducing the time needed for application reliability management. The service also makes it easier to manage live applications, which lets development operations managers and engineers sleep easier at night. In particular, all Google Container Engine clusters are equipped with logging capabilities so that users always know how their applications are running. And, of course, if they need to change their application, they can easily resize their cluster to add more CPU.
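To make the create-and-resize workflow a little more concrete, here is a minimal sketch in Python that only assembles the command lines one might hand to the `gcloud` command-line tool; it does not run anything. The subcommands and flags (`container clusters create`, `container clusters resize`, `--zone`, `--num-nodes`, `--machine-type`) reflect the current `gcloud` CLI as I understand it and may differ from the tool as it shipped in 2015. The cluster name, zone, machine type, and helper function names are hypothetical placeholders.

```python
import shlex


def create_cluster_cmd(name, zone, num_nodes=3, machine_type="n1-standard-1"):
    """Build (but do not run) a gcloud command that creates a cluster.

    All argument values are illustrative assumptions, not recommendations.
    """
    return [
        "gcloud", "container", "clusters", "create", name,
        "--zone", zone,
        "--num-nodes", str(num_nodes),
        "--machine-type", machine_type,
    ]


def resize_cluster_cmd(name, zone, num_nodes):
    """Build (but do not run) a gcloud command that changes the node count."""
    return [
        "gcloud", "container", "clusters", "resize", name,
        "--zone", zone,
        "--num-nodes", str(num_nodes),
    ]


if __name__ == "__main__":
    # Print the commands so they can be reviewed before anyone runs them.
    print(shlex.join(create_cluster_cmd("demo-cluster", "us-central1-a")))
    print(shlex.join(resize_cluster_cmd("demo-cluster", "us-central1-a", 5)))
```

Adding nodes is what gives the cluster more CPU in aggregate; building the argument list separately from executing it keeps the sketch safe to run anywhere, even without a Google Cloud project.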

The other big benefit that deserves to be called out is flexibility. Many of our customers use both on-premises and public cloud infrastructures to host their applications, so they need a solution flexible enough to let them scale and create applications for their various needs. These mixed set-ups increase the complexity of managing, migrating, and analyzing the data held within these stacks. This is where Kubernetes comes in. Kubernetes is an orchestration system created and open sourced by Google. It makes it easy for a company’s containers to work together as a single system. Together, Container Engine and Kubernetes give technology managers and development operations teams the flexibility to use on-premises, hybrid, or public cloud infrastructure. Thanks to its wide open source adoption, Kubernetes is also easy to integrate into a wide variety of platforms, particularly because companies like Red Hat, Microsoft, IBM, and VMware are all publicly supporting its adoption.
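As an illustration of how Kubernetes treats a group of containers as a single system, the sketch below builds a Deployment manifest as a plain Python dictionary and prints it as JSON, a format Kubernetes accepts alongside YAML. It assumes the current `apps/v1` Deployment API, which is newer than the tooling available when Container Engine launched. The application name, image, and helper function are hypothetical.

```python
import json


def deployment_manifest(name, image, replicas=3, port=80):
    """Return a Kubernetes Deployment manifest as a dict.

    Kubernetes keeps `replicas` identical copies of the container running
    and uses the shared `app` label to treat them as one unit.
    """
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": dict(labels)},
        "spec": {
            "replicas": replicas,
            # The selector must match the labels on the pod template.
            "selector": {"matchLabels": dict(labels)},
            "template": {
                "metadata": {"labels": dict(labels)},
                "spec": {
                    "containers": [
                        {
                            "name": name,
                            "image": image,
                            "ports": [{"containerPort": port}],
                        }
                    ]
                },
            },
        },
    }


if __name__ == "__main__":
    # "web" and "nginx:stable" are placeholder values for illustration.
    print(json.dumps(deployment_manifest("web", "nginx:stable"), indent=2))
```

Because the manifest is plain data, the same file could in principle be applied to a cluster on Container Engine or to a Kubernetes cluster running on-premises, which is the portability the paragraph above describes.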

Each week, Google launches more than 2 billion container instances across its global data centers. With this kind of power and scale, I can’t wait to see what this service will unlock for us and our clients in the months ahead.

“Shovel” by Kelly Sikkema is licensed under CC BY 2.0 / cropped